This article is more than two years old; the content may be outdated.

What is Kubernetes?

Kubernetes is a system for managing containerized applications across multiple hosts. It provides basic mechanisms for the deployment, maintenance, and scaling of applications.

Some examples of what Kubernetes can do:

- Automating the deployment and replication of containers.

- Scaling containers in or out on the fly.

- Grouping containers and load balancing traffic between them.

- Rolling out new versions of application containers to production without downtime.

- Resilience: if a container dies, it is automatically replaced.

Kubernetes' Infrastructure

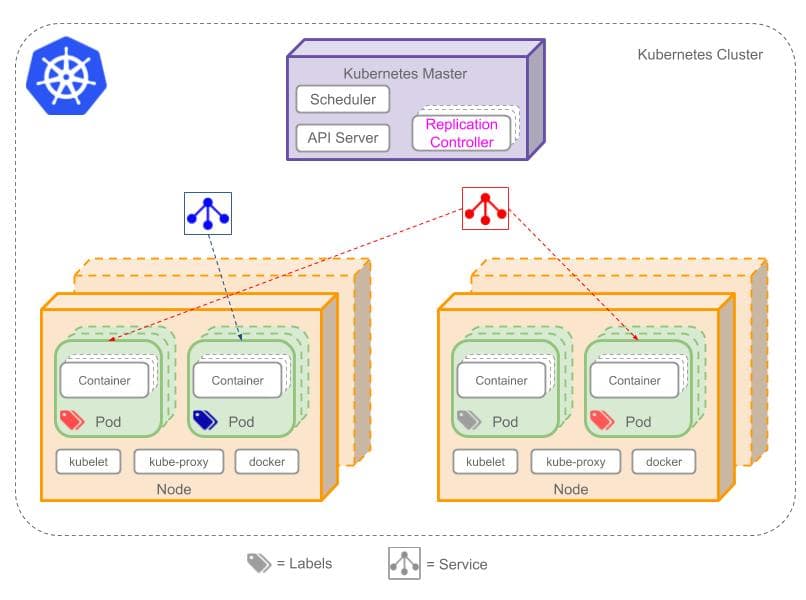

The core objects in a cluster are Pods, Services, Volumes, and Namespaces.

Pods

A Pod is the basic building block of the system: the smallest unit in the Kubernetes object model, representing a running process in the cluster. A Pod encapsulates one or more application containers, storage resources, a unique network IP, and options that govern how the containers behave. The most common container runtime used is Docker.

And what is Docker?

Well, Docker is a platform for developing, deploying, and running applications inside containers. This approach has many advantages over the conventional way of developing applications. Containerizing an application lets you move it to any other environment that can run the same image, so it behaves the same way everywhere. An image is an executable package that contains everything needed to run your application: the code, libraries, configuration files, servers, etc. An image is an ideal environment for your application because it is configured programmatically through a Dockerfile written by the developer, and it contains only the dependencies specified there and nothing else.

Aren't Docker and Kubernetes the same thing?

No. Docker helps you create the ideal environment for your application, while Kubernetes is an orchestration platform responsible for making sure deployments succeed and the application stays up, by creating replicas and load balancing requests. By application I mean all the components that together make up your application as a single unit. For example, the front end might be built with Flask in Python, some data might be processed in another Pod by a microservice written in Go, and the images might be stored separately from both. Kubernetes is the tool that lets all these containers work together in harmony.

NOTE: Kubernetes also supports other container runtimes, such as rkt (Rocket).

Networking

Each Pod has a unique IP address. Containers inside a Pod talk to each other through localhost because they share the same network namespace (IP address and port space). Containers can also communicate with entities outside the Pod, but because they share the Pod's network resources, they must coordinate how they use them; for example, two containers in the same Pod cannot listen on the same port.
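Containers in a Pod share one network namespace much like processes on a single host do. The Python sketch below leans on that analogy, with ordinary sockets in one process standing in for two containers (the function name `shared_localhost_demo` is hypothetical): one side reaches the other over localhost, and a third socket cannot claim a port that is already taken.

```python
import socket


def shared_localhost_demo() -> tuple[bytes, bool]:
    """Analogy only: sockets in one process stand in for containers in one Pod."""
    # "Container A" listens on a free port on localhost.
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
    server.listen(1)
    port = server.getsockname()[1]

    # "Container B" reaches it through localhost; no Pod IP is needed.
    client = socket.create_connection(("127.0.0.1", port))
    conn, _ = server.accept()
    client.sendall(b"hello from container B")
    received = conn.recv(1024)

    # A third socket cannot bind the same port: the port space is shared,
    # so containers in a Pod must agree on which ports each one uses.
    clash = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        clash.bind(("127.0.0.1", port))
        port_conflict = False
    except OSError:
        port_conflict = True
    finally:
        for s in (clash, conn, client, server):
            s.close()
    return received, port_conflict


if __name__ == "__main__":
    print(shared_localhost_demo())
```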

Storage

A Pod can have multiple storage volumes, and its containers can share data through them.

Pods are not designed to be durable. They are created for a specific process, so if a node fails or something else goes wrong, the Pods are terminated, and it is up to controllers to recreate them from a given template.

Labels

Pods can have Labels: key-value pairs that convey user-defined attributes. A clear advantage of Labels is that we can select Pods by label and target them with specific Services or Replication Controllers.
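The matching rule behind selecting Pods by label can be sketched as follows. It mirrors Kubernetes' equality-based selectors, where every key/value pair in the selector must be present on the Pod; the Pod names and labels are made up for illustration, and set-based selectors are left out.

```python
def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """Equality-based selection: every selector pair must appear in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def select(selector: dict[str, str], pods: dict[str, dict[str, str]]) -> list[str]:
    """Return the names of the Pods whose labels satisfy the selector."""
    return sorted(name for name, labels in pods.items() if matches(selector, labels))


# Hypothetical Pods and their labels.
pods = {
    "web-1": {"app": "shop", "tier": "frontend"},
    "web-2": {"app": "shop", "tier": "frontend"},
    "db-1": {"app": "shop", "tier": "backend"},
}

print(select({"tier": "frontend"}, pods))  # ['web-1', 'web-2']
print(select({"app": "shop"}, pods))       # ['db-1', 'web-1', 'web-2']
```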

Services

As I said before, Pods are not durable, nor are they designed to be. Pods are just instances of containers that may suddenly die for any number of reasons. So, imagine that we have a set of Pods responsible for one part of our app, and another set of Pods responsible for another part. How do they communicate with each other? Each Pod is given a unique IP, but that IP may change. A Pod that wants to talk to other Pods shouldn't have to track those changes; that would hand Pods a job that isn't theirs. That's where Services come in.

A Service defines a logical set of Pods and a policy by which to access them. The set of Pods a Service targets is usually determined by a Label Selector. A Service provides a stable virtual IP address (VIP) that forwards traffic to one or more of those Pods.

In summary, a Service gets a stable, unique IP address that other objects can use to reach it, while the Service itself finds its Pods by Label. It forwards incoming traffic and decides which of those Pods should handle each request.
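A rough model of that behavior: the Service below keeps a fixed virtual IP, re-evaluates its label selector against the current Pods on every request, and forwards to one of them. The `ToyService` class, the IP addresses, and the round-robin policy are assumptions for illustration; real kube-proxy modes choose backends in their own ways.

```python
import itertools
from dataclasses import dataclass


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict[str, str]


class ToyService:
    """Hypothetical model: a stable virtual IP in front of a changing set of Pods."""

    def __init__(self, vip: str, selector: dict[str, str]) -> None:
        self.vip = vip  # never changes, even as Pods come and go
        self.selector = selector
        self._turn = itertools.count()

    def endpoints(self, pods: list[Pod]) -> list[str]:
        # Re-run the label selector each time so replacement Pods are picked up.
        return [
            p.ip for p in pods
            if all(p.labels.get(k) == v for k, v in self.selector.items())
        ]

    def route(self, pods: list[Pod]) -> str:
        # Forward to the next endpoint in round-robin order (a simplification).
        ips = self.endpoints(pods)
        if not ips:
            raise RuntimeError(f"no Pods behind {self.vip}")
        return ips[next(self._turn) % len(ips)]


pods = [
    Pod("api-1", "10.0.0.4", {"app": "api"}),
    Pod("api-2", "10.0.0.5", {"app": "api"}),
    Pod("web-1", "10.0.0.9", {"app": "web"}),
]
svc = ToyService("10.96.0.10", {"app": "api"})
print([svc.route(pods) for _ in range(3)])  # ['10.0.0.4', '10.0.0.5', '10.0.0.4']

# api-1 dies and is replaced by a Pod with a new IP; callers still use svc.vip.
pods[0] = Pod("api-3", "10.0.0.7", {"app": "api"})
print(svc.vip, svc.endpoints(pods))  # 10.96.0.10 ['10.0.0.7', '10.0.0.5']
```

Callers only ever need `svc.vip`; which Pod IPs sit behind it is the Service's concern.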

Volumes

Volumes solve two main problems in Kubernetes. First, files written inside a container are ephemeral: when a container crashes and is restarted, it starts again in a clean state and those files are lost. Second, when a Pod has multiple containers, they often need to share files. Volumes are the solution to both.

A volume is a directory accessible to all containers running in a Pod, and the data stored in it is preserved across container restarts.

There are several types of Volumes:

- node-local (`emptyDir`)

- file-sharing (`nfs`)

- cloud storage from providers like AWS or Azure (`awsElasticBlockStore` and `azureDisk`)

- distributed file system (`glusterfs`)

- special purpose (`gitRepo` and `secret`)

There are more volume types, but they serve the same purposes as the ones listed above.
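The two problems volumes solve can be made concrete with a small model of a Pod that has one `emptyDir`-style volume. The `ToyPod` class is hypothetical: each container gets a private scratch area that is wiped on restart, while a shared temporary directory stands in for the volume and lives until the Pod is deleted.

```python
import tempfile
from pathlib import Path


class ToyPod:
    """Hypothetical Pod with several containers and one emptyDir-like volume."""

    def __init__(self, containers: list[str]) -> None:
        self._dir = tempfile.TemporaryDirectory()  # created with the Pod
        self.volume = Path(self._dir.name)  # visible to every container
        # Each container's own filesystem, modeled as an in-memory dict.
        self.container_fs: dict[str, dict[str, str]] = {c: {} for c in containers}

    def restart(self, container: str) -> None:
        # A restarted container starts in a clean state;
        # nothing here touches the shared volume.
        self.container_fs[container] = {}

    def delete(self) -> None:
        # Deleting the Pod also deletes its emptyDir volume.
        self._dir.cleanup()


pod = ToyPod(["writer", "reader"])
pod.container_fs["writer"]["cache.txt"] = "lost on restart"
(pod.volume / "shared.txt").write_text("kept on restart")

pod.restart("writer")
print(pod.container_fs["writer"])  # {}
# The "reader" container still sees the file the writer put in the volume.
print((pod.volume / "shared.txt").read_text())  # kept on restart
pod.delete()
print(pod.volume.exists())  # False
```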

Namespaces

Namespaces provide a scope for the objects inside them; a Namespace can be thought of as a workspace for a group of users. Some objects are namespaced, while others are not. With Namespaces, you can set up access control and resource quotas, and divide cluster resources between users.

Low-level objects like Nodes and PersistentVolumes are not in any namespace.
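Scoping mostly shows up in naming: a namespaced object's name only has to be unique inside its Namespace, while cluster-scoped objects such as Nodes share one cluster-wide set of names. The in-memory `ToyStore` below is a hypothetical sketch of that rule; objects created without a namespace fall into `default`, as they do in Kubernetes.

```python
# Kinds that live outside any namespace (a small, non-exhaustive sample).
CLUSTER_SCOPED = {"Node", "PersistentVolume", "Namespace"}


class ToyStore:
    """Hypothetical object store illustrating namespaced vs cluster-scoped names."""

    def __init__(self) -> None:
        self._objects: dict[tuple[str, ...], dict] = {}

    @staticmethod
    def key(kind: str, name: str, namespace: str = "default") -> tuple[str, ...]:
        if kind in CLUSTER_SCOPED:
            return (kind, name)  # the namespace is ignored
        return (kind, namespace, name)  # names only need to be unique per namespace

    def create(self, kind: str, name: str, namespace: str = "default") -> None:
        k = self.key(kind, name, namespace)
        if k in self._objects:
            raise ValueError(f"{'/'.join(k)} already exists")
        self._objects[k] = {"kind": kind, "name": name}


store = ToyStore()
store.create("Pod", "web", namespace="team-a")
store.create("Pod", "web", namespace="team-b")  # fine: different namespace
store.create("Node", "node-1")
try:
    store.create("Node", "node-1", namespace="team-a")  # same Node, namespace ignored
except ValueError as err:
    print(err)  # Node/node-1 already exists
```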

Resource quotas limit how much of a cluster's resources the users of a Namespace can consume; for instance, a team can be limited to a certain amount of CPU.
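A rough sketch of how such a limit can be enforced when Pods are created: each new Pod's CPU request is added to the namespace's running total and rejected if it would exceed the quota. The `ToyCpuQuota` class and `parse_cpu` helper are hypothetical; they only understand CPU quantities written as whole or decimal cores (`"2"`, `"0.5"`) or millicores (`"500m"`), which is how Kubernetes expresses CPU.

```python
def parse_cpu(quantity: str) -> int:
    """Convert a CPU quantity such as "500m", "2" or "0.5" to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)


class ToyCpuQuota:
    """Hypothetical per-namespace CPU quota checked when Pods are created."""

    def __init__(self, namespace: str, hard_limit: str) -> None:
        self.namespace = namespace
        self.limit = parse_cpu(hard_limit)
        self.used = 0  # millicores already requested in this namespace

    def admit(self, cpu_request: str) -> bool:
        request = parse_cpu(cpu_request)
        if self.used + request > self.limit:
            return False  # creating this Pod would exceed the quota
        self.used += request
        return True


quota = ToyCpuQuota("team-a", "2")
print(quota.admit("1500m"))  # True  (1500 of 2000 used)
print(quota.admit("600m"))   # False (would reach 2100)
print(quota.admit("0.5"))    # True  (exactly 2000)
```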

Namespaces are not always necessary; they are mainly intended for environments with many users spread across multiple teams or projects.

Controllers

ReplicaSet

These controllers supervise long-running Pods. A ReplicaSet starts a specified number of Pod replicas (set in a configuration file or by command) and makes sure those replicas are always running. For instance, if a node or Pod fails, the ReplicaSet creates a new Pod to replace it.
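Keeping N replicas running is a reconciliation loop: compare the desired count with what is observed, then create or delete Pods to close the gap. The `ToyReplicaSet` below is a hypothetical, single-pass sketch of that idea; a real controller watches the cluster continuously and decides which Pods to delete using its own rules.

```python
import itertools


class ToyReplicaSet:
    """Hypothetical controller that drives the running Pods toward `replicas`."""

    def __init__(self, name: str, replicas: int) -> None:
        self.name = name
        self.replicas = replicas
        self._ids = itertools.count(1)

    def reconcile(self, running: set[str]) -> set[str]:
        pods = set(running)
        while len(pods) < self.replicas:
            # Too few: start a replacement Pod from the template.
            pods.add(f"{self.name}-{next(self._ids)}")
        while len(pods) > self.replicas:
            # Too many: delete one (here, simply the last name in sorted order).
            pods.remove(max(pods))
        return pods


rs = ToyReplicaSet("web", replicas=3)
pods = rs.reconcile(set())  # web-1, web-2 and web-3 are created
pods.discard("web-2")       # a node fails and takes web-2 with it
pods = rs.reconcile(pods)   # web-4 replaces it
print(sorted(pods))         # ['web-1', 'web-3', 'web-4']
```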

However, it is recommended to use Deployments instead of managing ReplicaSets directly. A Deployment is a higher-level concept that manages ReplicaSets and provides declarative updates to Pods, along with many other useful features.

Deployments

With Deployments, you describe a desired state in a configuration file, and the Deployment controller changes the actual state of the Pods to match it.

Deployments cover several use cases. For example, we would use a Deployment to roll out a new version of our application and terminate the Pods running the old version, and also in the other direction, to roll back from the new version to a previous one.
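A rolling update can be sketched as a loop that starts Pods with the new version before terminating old ones, so capacity never drops below the desired count. This toy `rolling_update` assumes the equivalent of a `maxUnavailable` of 0 and a configurable `maxSurge` (both real fields of a Deployment's RollingUpdate strategy, though the logic here is heavily simplified); rolling back is the same operation aimed at the previous version.

```python
def rolling_update(current: list[str], target: str, max_surge: int = 1) -> list[list[str]]:
    """Hypothetical rollout: return a snapshot of Pod versions after every step."""
    if max_surge < 1:
        raise ValueError("max_surge must be at least 1")
    desired = len(current)
    pods = list(current)
    history = [list(pods)]
    while any(v != target for v in pods):
        batch = min(max_surge, sum(v != target for v in pods))
        # Surge: start new Pods first, so availability never drops below `desired`.
        pods.extend([target] * batch)
        history.append(list(pods))
        # Then terminate the same number of Pods running another version.
        for _ in range(batch):
            pods.remove(next(v for v in pods if v != target))
        history.append(list(pods))
    assert len(pods) == desired
    return history


steps = rolling_update(["v1", "v1", "v1"], "v2")
print(steps[-1])                   # ['v2', 'v2', 'v2']
print(min(len(s) for s in steps))  # 3, never below the desired count
rollback = rolling_update(steps[-1], "v1")
print(rollback[-1])                # ['v1', 'v1', 'v1']
```

A larger surge finishes in fewer steps at the cost of briefly running more Pods.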

Deployments also help us scale our application when it is under heavy load.
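In practice, scaling under load is often automated by a HorizontalPodAutoscaler that adjusts a Deployment's replica count. Its core rule, as described in the Kubernetes documentation, is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The function below is a minimal sketch of just that rule, clamped to a min/max range (the default bounds here are arbitrary); it leaves out details such as the tolerance band and stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Core HorizontalPodAutoscaler ratio rule, clamped to [min, max]."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# 3 Pods averaging 90% CPU against a 60% target -> ceil(4.5) = 5 Pods.
print(desired_replicas(3, 90, 60))  # 5
# Load drops: 4 Pods at 30% against a 60% target -> 2 Pods.
print(desired_replicas(4, 30, 60))  # 2
```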

Other controllers

There are other controllers, but the ones described above are the most commonly used. The remaining ones are:

- StatefulSets: like Deployments, they manage a set of Pods, but they also ensure that each Pod keeps a persistent identifier across any rescheduling.

- DaemonSets: ensure that all (or some) Nodes run a copy of a Pod.

- Jobs: ensure that a specified number of Pods terminate successfully.
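Each of these controllers enforces a different invariant, which the hypothetical helpers below try to capture in a few lines: StatefulSet Pods are named `<name>-<ordinal>`, so a rescheduled Pod comes back under the same name; a DaemonSet targets every Node that matches its node selector; and a Job keeps starting Pods until the requested number of completions succeed, giving up after too many failures (loosely modeled on the Job fields `completions` and `backoffLimit`).

```python
from typing import Callable


def statefulset_pods(name: str, replicas: int) -> list[str]:
    # Stable identities: the same ordinals every time the set is (re)created.
    return [f"{name}-{ordinal}" for ordinal in range(replicas)]


def daemonset_nodes(nodes: dict[str, dict[str, str]],
                    node_selector: dict[str, str]) -> list[str]:
    # One copy per matching Node; an empty selector matches every Node.
    return sorted(
        node for node, labels in nodes.items()
        if all(labels.get(k) == v for k, v in node_selector.items())
    )


def run_job(task: Callable[[], bool], completions: int,
            backoff_limit: int) -> tuple[int, int]:
    # Keep starting Pods until enough succeed; fail after too many failures.
    succeeded = failed = 0
    while succeeded < completions:
        if task():
            succeeded += 1
        else:
            failed += 1
            if failed > backoff_limit:
                raise RuntimeError("Job failed: backoff limit exceeded")
    return succeeded, failed


print(statefulset_pods("db", 3))  # ['db-0', 'db-1', 'db-2']
nodes = {"n1": {"disk": "ssd"}, "n2": {"disk": "hdd"}, "n3": {"disk": "ssd"}}
print(daemonset_nodes(nodes, {}))               # ['n1', 'n2', 'n3']
print(daemonset_nodes(nodes, {"disk": "ssd"}))  # ['n1', 'n3']
outcomes = iter([True, False, True, True])      # one Pod fails along the way
print(run_job(lambda: next(outcomes), completions=3, backoff_limit=2))  # (3, 1)
```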

Ingress

It is interesting to note that many newer Kubernetes features were still in beta at the time of writing, and Ingress was one of them (it has since become generally available, in Kubernetes 1.19).

An Ingress is an API object that manages external access to the Services in a cluster, typically over HTTP.

The interesting part about Ingresses is that they can provide load balancing, SSL termination, and name-based virtual hosting.
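Name-based virtual hosting and path routing boil down to a lookup from (host, path) to a backend Service. The sketch below approximates Ingress rules with `Prefix`-style path matching, where prefixes are compared element by element on `/` boundaries and the longest match wins; the rule set and Service names are hypothetical, and `None` stands in for a default backend.

```python
from typing import Optional

# Hypothetical rules: (host, path prefix, backend Service name).
RULES = [
    ("shop.example.com", "/", "frontend"),
    ("shop.example.com", "/api", "api"),
    ("blog.example.com", "/", "blog"),
]


def segments(path: str) -> list[str]:
    return [part for part in path.split("/") if part]


def route(host: str, path: str,
          rules: list[tuple[str, str, str]] = RULES) -> Optional[str]:
    """Pick the Service for a request, preferring the longest matching prefix."""
    best, best_len = None, -1
    request = segments(path)
    for rule_host, prefix, service in rules:
        wanted = segments(prefix)
        # "/api" matches "/api" and "/api/items" but not "/apix".
        if rule_host == host and request[: len(wanted)] == wanted:
            if len(wanted) > best_len:
                best, best_len = service, len(wanted)
    return best  # None means "fall through to a default backend"


print(route("shop.example.com", "/api/items"))  # api
print(route("shop.example.com", "/apix"))       # frontend
print(route("blog.example.com", "/post/1"))     # blog
print(route("other.example.com", "/"))          # None
```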